Annotated Logical Fallacies Dataset for Training AI - Review


Logical Fallacy Detector and Dataset

A new paper, “Logical Fallacy Detector” by Zhijing Jin et al. (v1 published in February 2022, v2 in May 2022), includes “Logic”, a dataset of annotated logical fallacy examples, as an open-source appendix. The dataset is available at https://github.com/causalNLP/logical-fallacy . In case the original repository goes offline, a September 2022 copy is available at https://github.com/tmakesense/logical-fallacy/tree/main/original-logical-fallacy-by-causalNLP .

The paper is available on arXiv as arXiv:2202.13758.

Below is the paper's original description of the cleaning process, quoted from Section A.2:

A.2 Data Filtering Details of LOGIC

The data automatically crawled from quiz websites contain lots of noises, so we conducted multiple filtering steps. The raw crawling by keyword matching such as “logic” and “fallacy” gives us 52K raw, unclean data samples, from which we filtered to 1.7K clean samples.

As not all of the automatically retrieved quizzes are in the form of “Identify the logical fallacy in this example: […]”, we remove all instances where the quiz question asks about irrelevant things such as the definition of a logical fallacies, or quiz questions with the keyword “logic” but in the context of other subjects such as logic circuits for electrical engineering, or pure math logic questions. This is done by writing several matching patterns. After several processing steps such as deleting duplicates, we end up with 7,389 quiz questions. Moreover, as there is some noise that cannot be easily filtered by pattern matching, we also manually go through the entire dataset to only keep sentences that contain examples of logical fallacies, but not other types of quizzes.

The entire cleaning process resulted in 1.7K high-quality logically fallacious claims in our dataset.

We open-source the dataset at https://github.com/causalNLP/logical-fallacy. As a reference, for each fallacy example we also release the URL of the source website where we extract this example from.
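The paper does not publish its matching patterns, so the following is only a minimal sketch of the kind of pattern-based filtering described in A.2: keep quizzes phrased as fallacy-identification questions and drop those about logic circuits, truth tables, or fallacy definitions. Every pattern below is our own hypothetical example, not the authors' actual rule set.

```python
import re

# Hypothetical patterns: the paper does not list the ones it actually used.
# Keep quizzes phrased as "identify/name the fallacy in this example".
KEEP_PATTERNS = [
    re.compile(r"\b(identify|name|which)\b.*\bfallac(y|ies)\b", re.IGNORECASE),
]

# Drop quizzes that use "logic" in an unrelated subject area,
# or that ask for a definition rather than an example.
DROP_PATTERNS = [
    re.compile(r"\blogic (circuit|gate)s?\b", re.IGNORECASE),
    re.compile(r"\btruth table\b", re.IGNORECASE),
    re.compile(r"\bwhat is the definition of\b", re.IGNORECASE),
]


def is_relevant_quiz(question: str) -> bool:
    """Return True if the question looks like a fallacy-identification item."""
    if any(p.search(question) for p in DROP_PATTERNS):
        return False
    return any(p.search(question) for p in KEEP_PATTERNS)


def filter_quizzes(questions: list[str]) -> list[str]:
    """Keep only the questions that pass the pattern filter."""
    return [q for q in questions if is_relevant_quiz(q)]
```

As A.2 itself notes, pattern matching cannot remove all the noise, so a manual pass over whatever survives such a filter is still needed.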

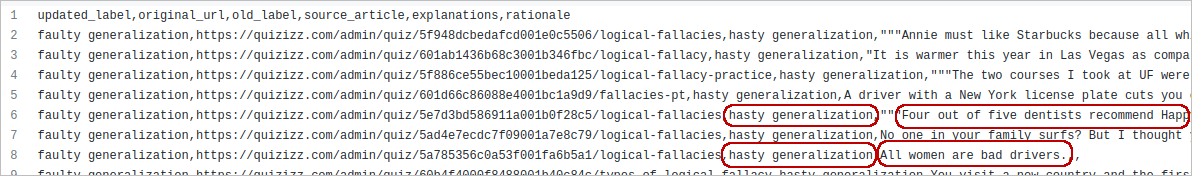

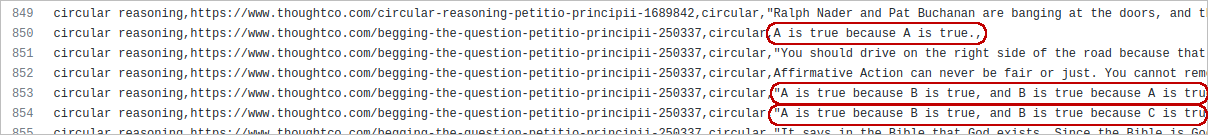

The logical fallacy dataset contains more than 2,000 samples. It is a CSV file in the format shown below, starting with Hasty Generalization.

The third column contains the name of the logical fallacy, and the fourth contains the text of the example.
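To inspect the data, a minimal reader could pull out just those two columns. This sketch assumes the file has a single header row and uses the column positions described above (0-based indices 2 and 3); if the CSV layout differs, the two constants need adjusting.

```python
import csv
from collections import Counter
from typing import Iterable

# Column positions as described in the text: third column = fallacy name,
# fourth column = example text (0-based indices 2 and 3).
LABEL_COL = 2
TEXT_COL = 3


def read_examples(lines: Iterable[str], has_header: bool = True) -> list[tuple[str, str]]:
    """Return (fallacy_name, example_text) pairs from a Logic-style CSV.

    `lines` can be an open file object or any iterable of CSV lines.
    """
    reader = csv.reader(lines)
    if has_header:
        next(reader, None)  # skip the header row
    pairs = []
    for row in reader:
        if len(row) <= TEXT_COL:
            continue  # skip malformed or truncated rows
        pairs.append((row[LABEL_COL].strip(), row[TEXT_COL].strip()))
    return pairs


def label_counts(pairs: Iterable[tuple[str, str]]) -> Counter:
    """Count how many examples each fallacy label has."""
    return Counter(label for label, _ in pairs)
```

Usage, with the path as an assumption: `with open("edu-train.csv", newline="", encoding="utf-8") as f: pairs = read_examples(f)`, then `label_counts(pairs).most_common()` gives a quick overview of the class balance.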

Although the authors describe the examples as high quality, this review explains why the quality is not that high. Several issues must be fixed in the dataset before the results of logical fallacy detection can be trusted. Most of these issues stem from the dataset not having been verified by a responsible person.

The fixed dataset, “FixedLogic”, is available at https://github.com/tmakesense/logical-fallacy/tree/main/dataset-fixed . It is released under the same open-source MIT license as the original, for non-commercial use. If you use or republish FixedLogic, you need to reference both this repository and the one with the original dataset.

Below is a review of the errors in the available dataset, their possible causes, and their consequences.

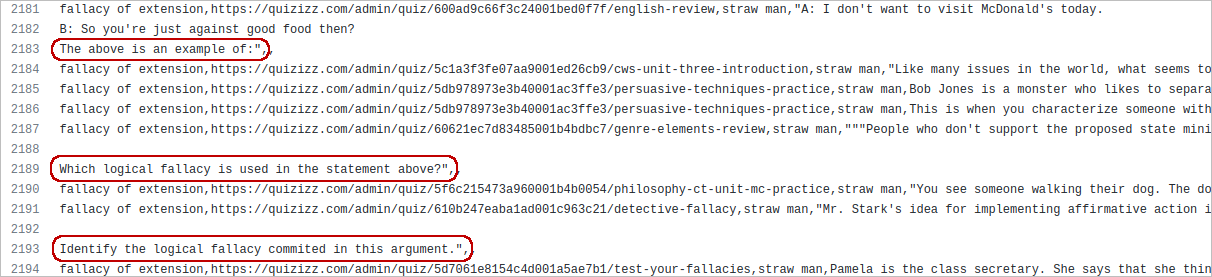

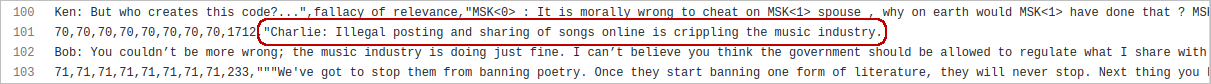

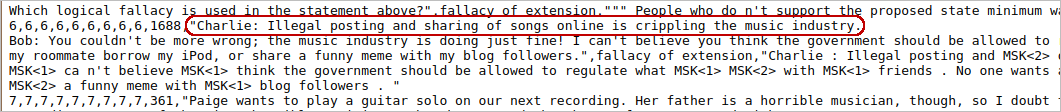

Quiz question is part of the logical fallacy example

After the quizzes were scraped from the internet, the question text should have been removed during the cleaning stage. If it is kept, a model could learn that the question itself is an example of, in this case, the Straw Man fallacy, and would then report false-positive straw man detections in testing and production. The dataset contains more than 60 such incorrect examples.
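A simple way to find and strip such leftovers is to match common quiz-question openings at the start of the example text. The two patterns below are hypothetical starting points; in practice they would be extended after looking at the actual entries, and anything they strip should be spot-checked.

```python
import re

# Hypothetical quiz-question patterns; extend them after inspecting the data.
QUESTION_PREFIXES = [
    # "Identify the fallacy in this example: ..." / "Name the logical fallacy. ..."
    re.compile(r"^\s*(identify|name) the (logical )?fallacy( in this example)?\s*[:.?-]\s*",
               re.IGNORECASE),
    # "Which fallacy is committed here? ..." / "Which logical fallacy does ...:"
    re.compile(r"^\s*which (logical )?fallacy (is|does) [^?:]*[?:]\s*", re.IGNORECASE),
]


def strip_question(text: str) -> tuple[str, bool]:
    """Remove a leading quiz question; return (cleaned_text, was_stripped)."""
    for pattern in QUESTION_PREFIXES:
        cleaned, n = pattern.subn("", text, count=1)
        if n:
            return cleaned.strip(), True
    return text, False
```

Returning the flag alongside the text makes it easy to count how many entries were affected and to review them by hand.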

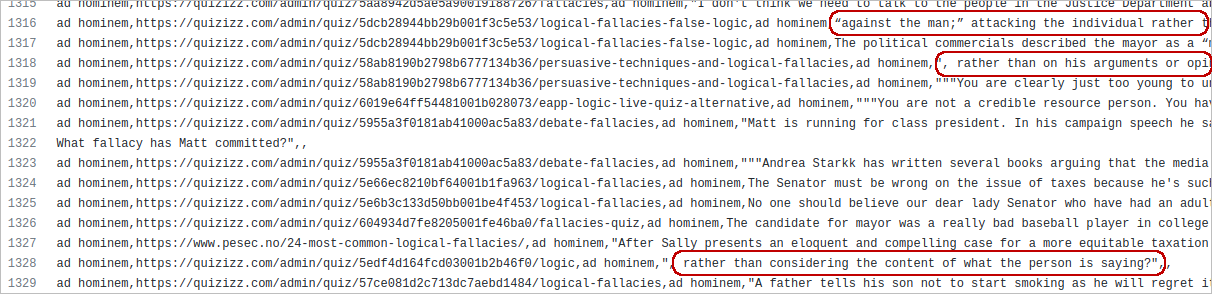

Definition is part of the logical fallacy examples

In the original Logic dataset, the example field sometimes contains a description or definition of the fallacy instead of an example. Such entries would confuse the model and train it to pay attention to the wrong parts of the text.
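Definition-like entries can be surfaced for manual review with a few cue phrases. The phrase list below is a hypothetical heuristic of ours, meant to produce a shortlist for a human to check, not to classify entries automatically.

```python
import re

# Hypothetical cue phrases that usually signal a definition rather than an
# example; a starting point for manual review, not a classifier.
DEFINITION_CUES = re.compile(
    r"\b(this fallacy|the fallacy of|is a fallacy|occurs when|"
    r"is when (someone|a person)|refers to)\b",
    re.IGNORECASE,
)


def looks_like_definition(text: str) -> bool:
    """Flag texts that describe a fallacy instead of committing one."""
    return bool(DEFINITION_CUES.search(text))


def flag_definitions(pairs):
    """Return the (label, text) pairs that should be reviewed by hand."""
    return [(label, text) for label, text in pairs if looks_like_definition(text)]
```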

We think a formal version of the fallacy could be acceptable, as in this example for the Bandwagon Fallacy:

Idea X is popular. Therefore, X is correct

In some cases this might look like overkill, though it covers all the bases. Here is a set of such examples for the Circular Reasoning Fallacy:

The important point is to place such formal samples only in the training set, not in the test or validation sets. If you use a combined dataset for your experiments, keep this in mind before splitting it.
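One way to honor this during the split is to set the formal templates aside, split only the concrete examples, and then add every template to the training set. The template detector here is a hypothetical heuristic (single-letter placeholders such as “X” or “Y”, as in “Idea X is popular. Therefore, X is correct”); a hand-maintained list of template entries would work just as well. The split itself is a plain seeded random split, not stratified by label.

```python
import random
import re

# Hypothetical heuristic: texts with standalone placeholders X, Y, or Z
# are treated as formal templates of a fallacy.
PLACEHOLDER = re.compile(r"\b[XYZ]\b")


def is_formal_template(text: str) -> bool:
    return bool(PLACEHOLDER.search(text))


def split_keep_templates_in_train(pairs, test_fraction=0.2, seed=0):
    """Split (label, text) pairs so that formal templates land only in train.

    Only the concrete examples are shuffled and divided; every template is
    appended to the training set afterwards.
    """
    templates = [p for p in pairs if is_formal_template(p[1])]
    concrete = [p for p in pairs if not is_formal_template(p[1])]
    rng = random.Random(seed)
    rng.shuffle(concrete)
    n_test = int(len(concrete) * test_fraction)
    test = concrete[:n_test]
    train = concrete[n_test:] + templates
    return train, test
```

The same idea applies to a validation set: carve it out of `concrete` before the templates are added back.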

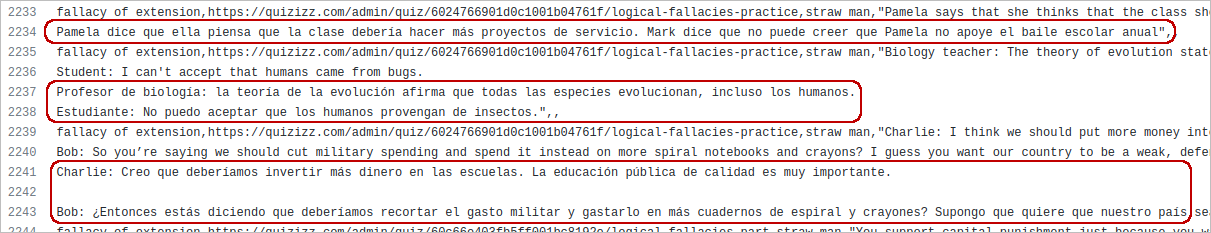

Spanish text in the example

In some cases, the Spanish translation of the fallacy is part of the example. The same scraping without any cleaning or proofreading leads to examples like the following:

How does this affect the model's detection rate? Probably positively, if you are training a multilingual logical fallacy detector. However, such examples could have side effects for detectors that specialize in English only. In the fixed dataset, we edited such examples to keep only the English part.
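Entries with Spanish fragments can be shortlisted by counting Spanish-specific characters and common Spanish function words. This is a rough hypothetical heuristic of ours (the marker sets and the threshold are assumptions); a proper language-identification library would be more reliable, and flagged entries still need manual editing as described above.

```python
import re

# Hypothetical markers: characters and function words common in Spanish
# but rare in English text. Flagged items still need a manual check.
SPANISH_CHARS = re.compile(r"[ñ¿¡áéíóú]", re.IGNORECASE)
SPANISH_WORDS = {"el", "la", "los", "las", "de", "del", "que", "es",
                 "una", "por", "para", "falacia"}


def looks_spanish(text: str, min_hits: int = 2) -> bool:
    """Return True if the text contains at least `min_hits` Spanish markers."""
    words = re.findall(r"\w+", text.lower())
    hits = sum(1 for w in words if w in SPANISH_WORDS)
    hits += len(SPANISH_CHARS.findall(text))
    return hits >= min_hits
```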

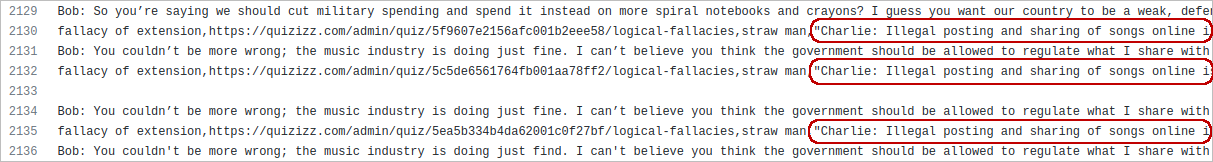

Duplicates of examples

If your dataset contains duplicates and you do not remove them during cleaning, your test results can be unreliable. For example, if you split the dataset into training and test sets and the same sample (a duplicate) ends up in both, the model will very likely classify the test copy correctly, since it has already seen that sample during training. This inflates the model's detection rate and gives a false impression of its effectiveness.

Below is an excerpt from the edu-train.csv training set:

And here is an excerpt from the test set, edu-test.csv:

The source dataset contains more than 200 such duplicates. The FixedLogic dataset already has them removed.
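Both problems can be checked mechanically: removing duplicates from the combined data before splitting, and detecting leakage in a split you already have, such as edu-train.csv and edu-test.csv. The sketch below treats two texts as duplicates if they match after lowercasing, replacing punctuation with spaces, and collapsing whitespace; this normalization is our assumption and not necessarily the criterion used to build FixedLogic.

```python
import re


def normalize(text: str) -> str:
    """Lowercase, replace punctuation with spaces, and collapse whitespace,
    so that trivial formatting differences do not hide a duplicate."""
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(text.split())


def deduplicate(pairs):
    """Drop repeated (label, text) examples, keeping the first occurrence.

    Also returns the texts that reappear under a different label; those
    deserve a manual look rather than silent removal.
    """
    first_label = {}
    unique, conflicts = [], []
    for label, text in pairs:
        key = normalize(text)
        if key not in first_label:
            first_label[key] = label
            unique.append((label, text))
        elif first_label[key] != label:
            conflicts.append(text)
    return unique, conflicts


def leaked_examples(train_texts, test_texts):
    """Return the test texts whose normalized form also occurs in training."""
    train_keys = {normalize(t) for t in train_texts}
    return [t for t in test_texts if normalize(t) in train_keys]
```

Run `deduplicate` on the combined data before splitting; `leaked_examples` is a quick sanity check on a split you received from elsewhere.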

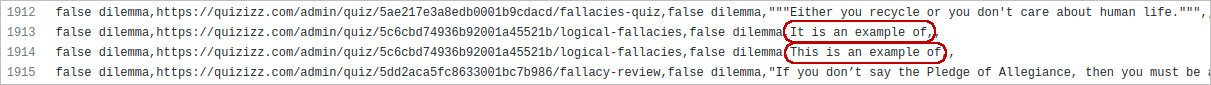

No example, just a question

Please see the extract below:

We certainly cannot call this a correct example of the False Dilemma Fallacy. Does it mean that a detector trained on such a dataset will find a False Dilemma in your argument whenever you mention an example to prove your point? Entries like this are better removed.

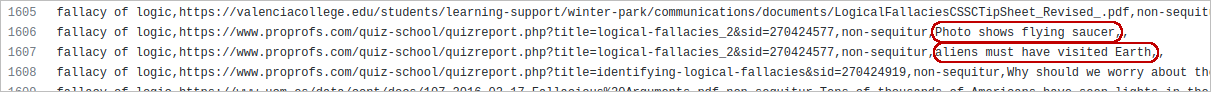

Plain incorrect examples

No, this doesn't look like a non sequitur. With some imagination, it might pass for Wishful Thinking.

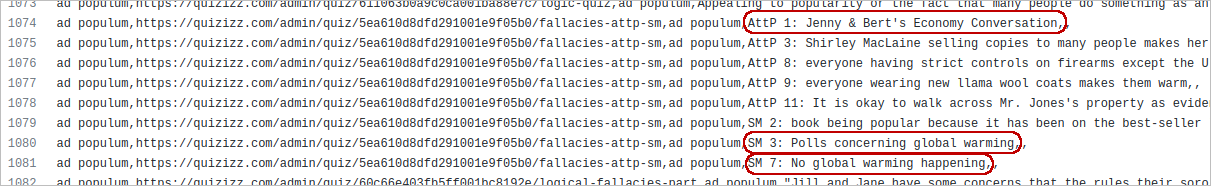

Here is more uncleaned data from quiz scraping. The last bit, “No global warming happening”, might raise the detection rate on ClimateLogic, at the cost of some additional false positives. But this is not an example of Ad Populum, so entries like this need to be removed too.

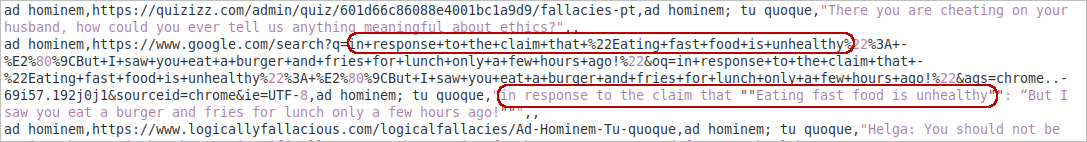

Hidden source

Essentially, citing that source for this sample amounts to saying:

When I typed “in response to the claim that ‘Eating fast food is unhealthy…” into Google, I accidentally found an example: “in response to the claim that ‘Eating fast food is unhealthy…”

or, to simplify:

I found the text “Logical Fallacy” when I typed into the Google search box: “Logical Fallacy”

It is a kind of circular-reference fallacy. The Google search results show the original sites that contain the example: an article, “18 Common Logical Fallacies and Persuasion Techniques” by Christopher Dwyer Ph.D., and the book “Persuasion: Subliminal Techniques to Influence and Inspire People” by Shevron Hirsch. Why does the dataset list Google as the source here instead of Psychology Today? A mystery.

Conclusion not so positive

The Logic dataset presented with the article “Logical Fallacy Detector” contains 2,453 annotated examples of logical fallacies. The dataset is very raw and uncleaned; in our opinion, approximately 10% of the samples are incorrect. And yet so many respected people spent their time working on it.

We removed the questionable items from Logic; the final version of the FixedLogic dataset has 2,226 samples and is available at https://github.com/tmakesense/logical-fallacy/tree/main/dataset-fixed .
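As a rough cross-check of the 10% estimate, the share of samples that were dropped between the two versions is:

$$
\frac{2453 - 2226}{2453} = \frac{227}{2453} \approx 0.093 = 9.3\%
$$

This is only a proxy: some flawed samples (for example, those with Spanish text) were edited rather than removed, so the removed share and the incorrect share need not be identical.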

Conclusion positive

Anyway:

- It's a pleasure to see research effort in this direction.

- With a small dataset-cleanup effort, the detection rate could be increased significantly.

- With the sample mini BERT-based detector and the fixed dataset, future experiments will get a decent jump start.

Thank you for your attention
